AI Singapore makes AI more inclusive for Southeast Asia with Gemma 2

Launched in 2017, AI Singapore is a national network of AI research institutions and organizations dedicated to advancing Singapore’s AI development. One of its projects, SEA-LION, is a family of open large language models (LLMs) that brings the power of LLMs to Southeast Asian (SEA) countries previously overlooked by the world of AI.

The team behind SEA-LION chose Gemma, Google’s family of lightweight and efficient open models, for its vocabulary and linguistic understanding, as well as its size-to-performance ratio. With Gemma, SEA-LION developers created a powerful, efficient, and accessible LLM used by millions of people in the SEA region today.

The challenge

The SEA-LION team recognized that many of the languages spoken across the region weren’t represented by today’s most popular LLMs. As a result, parts of the region and entire groups of people had little to no access to many of AI’s potential applications. The team also found that even when these mainstream LLMs had a basic understanding of local SEA languages, they lacked comprehension of the linguistic and cultural nuances familiar to native speakers.

As William Tjhi, head of artificial intelligence at AI Singapore, explains, most of the world's AI is built on Western and Eastern languages, so a lot can be lost in translation: “The global LLM landscape evolved around two bodies: the West Coast and China. These models reflect those worldviews based on data sets that train them and the languages that train them.”

“Gemma’s Tokenizer performs better when applied to the languages we have in our region. You can see that in the output. This greatly enhances the model performance when trained on SEA tokens, because the tokenizer is more optimal vs the tokenizer of other models.”

The solution

The SEA-LION team created an inclusive set of LLMs that accurately reflect the region’s nuances, contexts, and cultural diversity. To build an LLM with a true understanding of a whole new set of languages, the team needed diverse, high-quality training data, so they collaborated with Google DeepMind and Google Research teams. They also worked with native speakers and linguists to filter out irrelevant data, such as gambling content and advertisements, and to ensure accurate, natural-sounding translations.

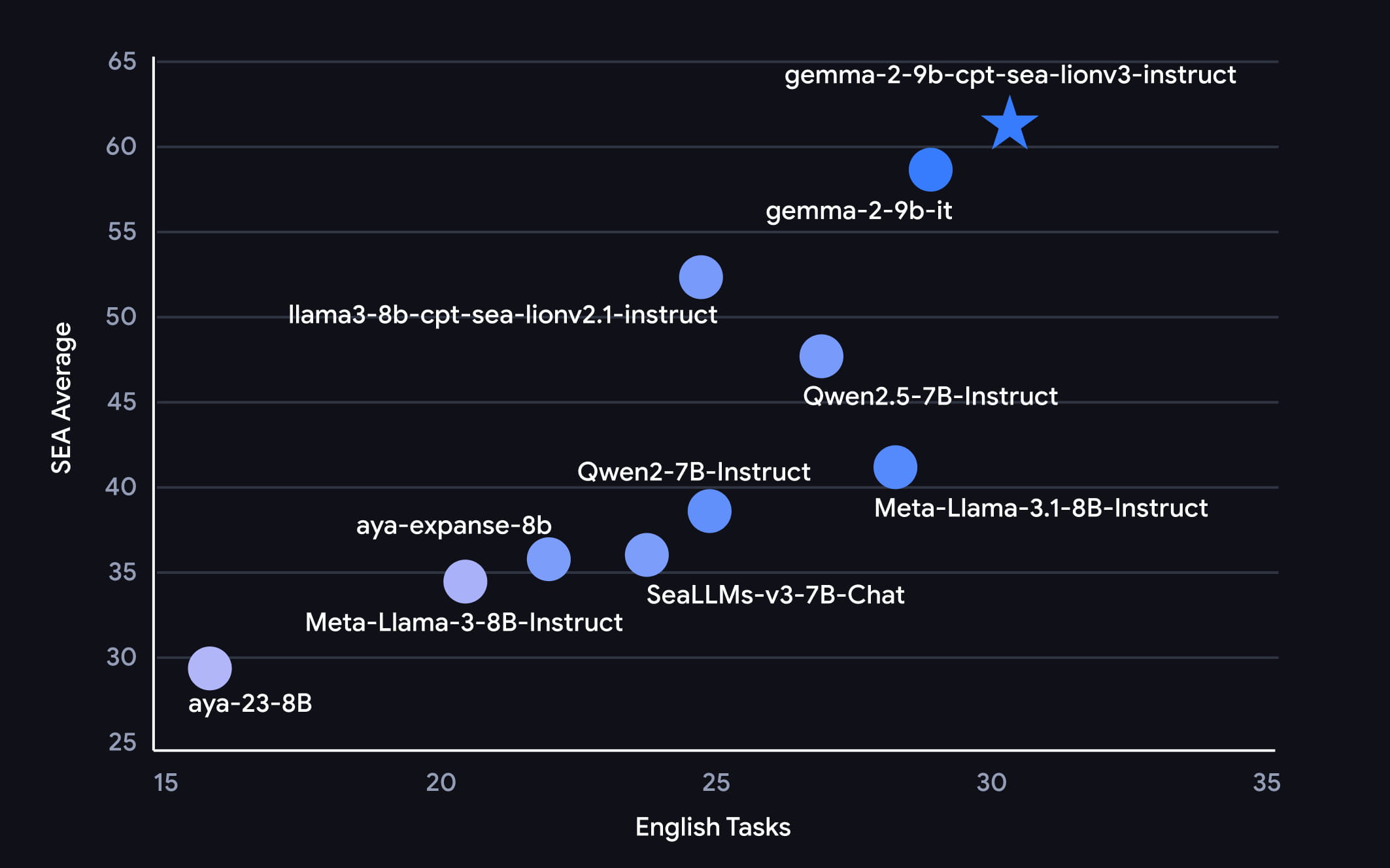

The team’s latest iteration, SEA-LION V3, was built through continued pre-training of Gemma 2 on 200 billion tokens of SEA data. The team found that Gemma’s tokenizer not only contained more tokens for the intended languages but also performed better than the tokenizers of other models. The 9-billion-parameter version of Gemma 2 was chosen for its balance of size and efficiency, because the compute resources needed to run larger models can be scarce in many parts of the region.

The impact

SEA-LION V3 is the team’s most advanced iteration yet, and other local AI developers and researchers are already using it. Tech company GoTo recently launched Sahabat-AI, an LLM ecosystem built on SEA-LION for Indonesian developers. Sahabat-AI is integrated into GoTo’s Dira AI voice assistant, allowing users to access Gojek services and GoPay payments with voice commands in native languages and dialects.

GoTo CEO Patrick Walujo said that he expects Sahabat-AI to positively impact millions of lives in Indonesia: “It will help our businesses to communicate in new ways with customers, it will help our government ministries develop tools to engage with citizens more comprehensively.”

11 Southeast Asian language proficiencies

14k+ downloads on Hugging Face

38M monthly active GoPay users with access to Dira

What’s next

The team at AI Singapore is already planning the next iteration of SEA-LION. Their goal is to build both smaller and larger versions on Gemma, catering to a wider variety of use cases and giving local communities even greater flexibility. SEA-LION has been central to the region’s AI boom, and LLMs built on it, like Sahabat-AI, are just the beginning.

“The launch of the new Gemma-based SEA-LION v3 with AI Singapore represents a major step forward for inclusive AI. By harnessing the power of Google’s Gemma 2, this new model significantly outperforms previous versions across a range of Southeast Asian evaluation metrics,” said Manish Gupta, senior director at Google DeepMind. “We look forward to the exciting applications this unlocks and the benefits it drives for diverse communities across Southeast Asia.”